What if prompts worked like real code?

$10 billion?

Would be cheaper to make it out of flatpack and call it Billy Bitskåp.

PODCAST

Getting AI Apps Past the Demo

Eight open tabs of LangChain docs, flaky prompt outputs, unrealistic deadlines, lack of coffee... there are so many reasons AI apps don’t make it to production.

Vaibhav told me how BAML helps with (at least) a couple of those by treating prompts more like real code: structured, testable, and easier to debug. That shift helps teams build more reliable systems without killing iteration speed.

It’s built to support smoother workflows through:

Live previews – watch prompts update as you write

Instant test runs – check outputs without leaving your editor

Debuggable views – token-level highlights and prompt diffs

I'll give you a prompt to click below to listen.

UPCOMING VIRTUAL CONFERENCE

GenAI in Games, 3D & VFX

June 10 – 10:00–11:30 AM PDT / 19:00–20:30 CEST

MLOps Community and Tulip are hosting a live event with studios and builders using GenAI in production to reshape workflows across pre-vis, camera work, mesh generation, and more.

Talks cover:

Fine-tuning small models and running agent-based systems in creative pipelines

AI-assisted animation: from custom keyframes to full motion

Legal Q&A on IP, contracts, and the risks of AI-generated content

Real-world R&D wins (and friction) from production teams

Not your usual use case roundup - but packed with lessons about building GenAI tooling and navigating deployment in complex pipelines.

PODCAST

Product Metrics are LLM Evals

Evals can be humbling - a bit like thinking you’re nailing Duolingo, then your six-year-old casually switches languages and leaves you behind.

Raza argued that the best evals are just product metrics in disguise - what really matters is whether the user achieved their goal. In practice, that means building evals around real behavior, not just static labels.

For example, teams are using:

Behavior-driven signals: Tracking if users copy text, edit it, or follow up with an action

Prompt iteration workflows: Letting domain experts tweak prompts without redeploying code

CI-integrated evals: Automatically gating releases based on test case performance
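To make the CI-gating idea a bit more concrete, here is a minimal sketch, not anything taken from the episode. `EvalCase`, `pass_rate`, `gate_release`, the substring check, and the 0.9 threshold are all hypothetical names and choices made for illustration; `generate` stands in for whatever function calls your model.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class EvalCase:
    """One test case: a prompt plus a (hypothetical) pass criterion."""
    prompt: str
    expected_substring: str


def pass_rate(cases: Sequence[EvalCase], generate: Callable[[str], str]) -> float:
    """Fraction of cases whose output contains the expected substring."""
    if not cases:
        raise ValueError("need at least one eval case")
    passed = sum(
        1
        for case in cases
        if case.expected_substring.lower() in generate(case.prompt).lower()
    )
    return passed / len(cases)


def gate_release(
    cases: Sequence[EvalCase],
    generate: Callable[[str], str],
    threshold: float = 0.9,  # assumed cut-off; tune it per product
) -> bool:
    """Return True only if the pass rate meets the release threshold."""
    return pass_rate(cases, generate) >= threshold
```

A CI job could call `gate_release` and exit non-zero when it returns `False`, which blocks the release. A substring match is the crudest possible check; swapping in a behavior-driven signal only changes the body of `pass_rate`.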

I'm sure this episode will match your eval expectations when you click below and listen.

WORLD TOUR - NEXT STOPS

The lowest budget, highest signal AI Agent non-conference conference

San Francisco - Done!

The tour continues:

Still more dates to be announced, and if you want to get involved, just let us know.

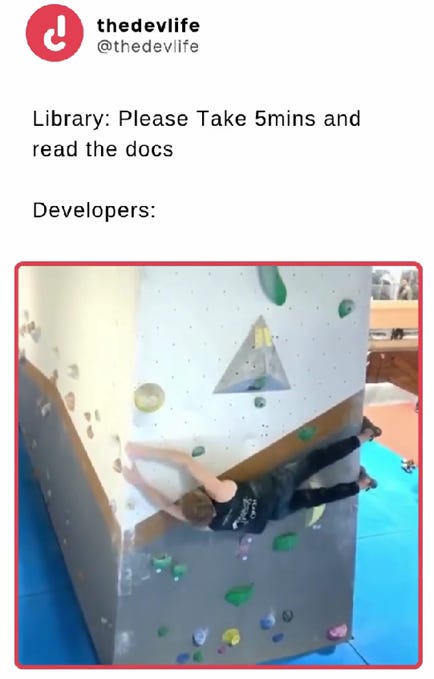

MEME OF THE WEEK

ML CONFESSIONS

So I was dealing with this bug in our explainability dashboard the other day. You know how it is with these compliance features - nobody really looks at them until there's a problem, and then suddenly everyone cares.

We'd been using SHAP for feature attribution, but something was clearly off. The plots looked completely wrong - like, the top features made zero sense. Of course, one of the senior folks noticed and called it out in front of everyone. "These don't look right," they said. Which... yeah, no kidding. Great timing, right?

I dug into it and found the issue pretty quickly. We had this mess with categorical variables where the one-hot encoding wasn't consistent between training and inference. Classic mistake, totally my fault, but also a real pain to fix properly. And I was already drowning in this other model migration project.

So here's where it gets bad. Instead of doing the right thing and fixing the encoding issue, I just... flipped the SHAP values. Literally just multiplied everything by -1. And you know what? Suddenly the plots looked "intuitive." People were nodding along, saying it matched their domain knowledge.

We pushed it to production.

That hack is still running in prod today. It shows up in audit presentations. I've started calling it a "directional reinterpretation" when people ask about the methodology.

But yeah, the signs are all backwards. On purpose. And I'm the only one who knows.

Submit your confessions here.

BLOG

Prompt Deployment Goes Wrong: xAI Grok's obsession with White Genocide

With his black eye and K-hole trance (sorry, perfectly normal behaviour), Musk’s latest Oval Office drop-in seemed to mirror xAI’s recent state.

A late prompt edit caused Grok to inject political framing into neutral queries - something better ops could have flagged. The post makes a case for treating prompts like code: versioned, tested, and cautiously deployed. It adds:

Progressive rollouts: Shadow and canary tests to catch regressions early.

Beyond human feedback: Include automated and long-term reliability checks.

Prompt changes as high-risk deploys: They need real safeguards.
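For a feel of what a canary rollout for a prompt could look like, here is a rough sketch under stated assumptions, not the method from the blog post. `in_canary` and `pick_prompt` are hypothetical helpers: they hash a user ID into one of 100 buckets so that a fixed share of users sees the candidate prompt, and the same user always lands on the same side.

```python
import hashlib


def in_canary(user_id: str, percent: int) -> bool:
    """Deterministically place a user in the canary group.

    The SHA-256 hash of the user ID is mapped to a bucket in 0..99;
    buckets below `percent` get the candidate prompt.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100
    return bucket < percent


def pick_prompt(
    user_id: str, stable_prompt: str, candidate_prompt: str, percent: int
) -> str:
    """Serve the candidate prompt to the canary share, the stable one otherwise."""
    return candidate_prompt if in_canary(user_id, percent) else stable_prompt
```

Starting with a small `percent` and raising it only while the metrics hold up means a bad edit reaches a slice of traffic instead of everyone, and setting it back to 0 is the rollback.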

Save yourself slipping into an avoidable hole and read the blog instead.

HIDDEN GEMS

An open-source GitHub project offering a SQL-based workflow engine for API integrations, designed to help data teams replace SaaS connectors with version-controlled, YAML-defined pipelines that support dynamic logic, HTTP calls, and database syncs.

A chance to have your say in the official 2025 Stack Overflow Developer Survey, which gathers insights on the tools, languages, and trends shaping the software industry, with results widely used by teams, hiring managers, and tech platforms alike.

An open-source cognitive architecture prototype built in Rust, exploring neural-symbolic integration for general intelligence through modular components like perception, memory, and reasoning.

An interactive site showcasing how popular vision-language models reproduce social stereotypes, with visual examples and tests that highlight underlying bias patterns across image-text pairings.