Context Is the Real Stack

Plus a free GPU guide, community awards, why Agentic RAG reduces hallucinations, how Da2a rethinks data platforms, and what Uber learned about real-time search

In the holiday spirit, I’m sharing our free 2026 GPU Buyer’s Guide.

After six months of researching the neo-cloud landscape, I put together a guide to demystify hardware, pricing, and cold starts. It's a practitioner-focused resource, built with input from the community, that answers the questions your AI tools can't.

Enjoy your holidays, and your free GPU guide.

HOT TAKE

The RAG Hallucination

RAG didn’t fix hallucinations. It made them harder to notice.

When something breaks, do you inspect retrieval or ship another prompt?

LAST WEEK’S TAKE

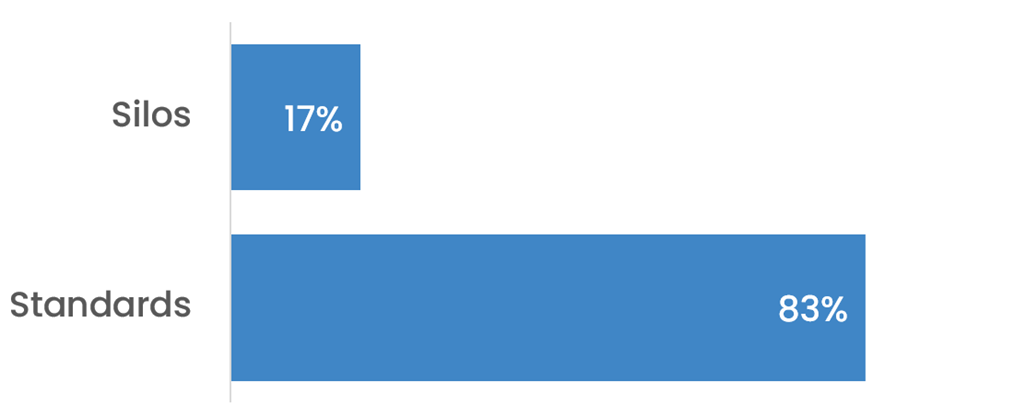

You’ve Got Standards

The majority of you see standards as the way to fix fragmentation.

COMMUNITY AWARDS 2025

The OpsCARS 2025

We’re wrapping up 2025 with The OpsCARS - our end-of-year awards, picked by you.

Some categories are serious (Best Original Research, Most Interesting OSS Project). Some are less so (Most Overhyped Term, Most Unhinged Model Response). All of them reflect what shaped the year, for better or worse.

Voting is open now. Cast yours and help recognize the people, projects, and moments that defined the year.


HIDDEN GEMS

Curated finds to help you stay ahead

Real-time delivery search at Uber, covering architecture, indexing, ranking, and experimentation patterns used to balance latency, relevance, and rapid menu churn at scale.

Running 1,000+ automated ML experiments using Claude Code and a shared skills registry to capture results, generate scripts, and reuse collective learnings.

Real-time search, ranking, and recommendation systems through an API-first platform connecting data sources, training models, and serving results via simple query and SDK interfaces.

Tools and workflows from Apple for training, fine-tuning, and evaluating LLMs, with an emphasis on efficiency, reproducibility, and safety-oriented evaluation.

Bonus holiday playlist gems

A curated set of talks, podcasts, and keynotes covering context engineering in practice, from agent design and long-term memory to RAG, tooling, and real production lessons from teams at LangChain, IBM, YC, and the MLOps Community.

A collection of videos focused on testing agents before release, covering simulation-driven evaluation, reliability failures, voice agents, RL-based training, and lessons from production teams building systems that break safely long before users ever see them.

Where agents stop being chatbots and start doing work. Talks and workshops on tool calling and MCP, spanning agent-tool contracts, production failures, enterprise constraints, and real implementations from Anthropic, OpenAI, and teams shipping agents in practice.

Job of the week

Senior MLOps Engineer // Lore (Remote, US)

Lore is hiring a senior MLOps engineer to design and operate production ML systems end to end. The role focuses on pipelines, infrastructure, deployment, and observability, working closely with small squads that own systems in production.

Responsibilities

Build and maintain end-to-end ML pipelines in production

Automate training, deployment, and monitoring workflows

Implement model observability, traceability, and reproducibility practices

Collaborate with squads on infra and production ML decisions

Requirements

8+ years of experience running ML systems in production

Strong experience with GCP-based ML infrastructure

Hands-on use of MLflow, CI/CD, and orchestration tools

Experience deploying models via APIs using FastAPI

MLOPS COMMUNITY

Context Engineering 2.0

Production agents don’t usually fail because the model is dumb - they fail because the system can’t feed them the right context fast, safely, and cheaply. This conversation argues the next bottleneck is “context engineering,” not prompts.

Where MLOps still pays for itself: high-scale, direct-to-money systems like recommenders and fraud detection, where feature stores keep getting rebuilt because maintaining them in-house is painful.

Context as a unified layer: treat unstructured docs, memory, and structured data as one “context surface,” with relationships between them - instead of three separate pipelines that never agree.

MCP as org scaling, not hype: moving tools into servers lets teams evolve context endpoints independently from agent workflows, like microservices for data access.

Tie it together and you get the punchline: the winners won’t be the teams with the fanciest agent - they’ll be the ones who control context end-to-end.
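Here is a minimal sketch of what a single "context surface" could look like, assuming a simple in-memory store: docs, memory, and structured rows all become one `ContextItem` type, and typed links between them let one lookup return a customer record together with its related doc and memory entry. `ContextItem`, `ContextSurface`, the relation names, and the example data are hypothetical illustrations, not taken from the conversation or from any library.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ContextItem:
    """One unit of context, whatever its source."""
    item_id: str
    kind: str  # hypothetical kinds: "doc", "memory", or "row"
    content: Any
    metadata: dict = field(default_factory=dict)


class ContextSurface:
    """Hypothetical single store for docs, memory, and structured data, plus links."""

    def __init__(self) -> None:
        self._items: dict[str, ContextItem] = {}
        self._links: defaultdict[str, set[tuple[str, str]]] = defaultdict(set)

    def add(self, item: ContextItem) -> None:
        self._items[item.item_id] = item

    def link(self, src: str, relation: str, dst: str) -> None:
        # Record the relationship in both directions so either end can reach the other.
        self._links[src].add((relation, dst))
        self._links[dst].add((f"inverse:{relation}", src))

    def gather(self, item_id: str) -> list[ContextItem]:
        """Return the item followed by its direct neighbours, sorted by id."""
        neighbour_ids = sorted({dst for _, dst in self._links[item_id]})
        return [self._items[item_id]] + [self._items[n] for n in neighbour_ids]


# Placeholder data: one structured row, one doc, one memory entry.
surface = ContextSurface()
surface.add(ContextItem("customer:42", "row", {"plan": "pro"}))
surface.add(ContextItem("doc:pricing", "doc", "The pro plan includes priority support."))
surface.add(ContextItem("mem:42:last", "memory", "User asked about downgrading last week."))
surface.link("customer:42", "described_by", "doc:pricing")
surface.link("customer:42", "has_memory", "mem:42:last")

print([item.kind for item in surface.gather("customer:42")])  # ['row', 'doc', 'memory']
```

A production version would sit on real storage and retrieval, but the design choice is what matters: relationships live in the context layer itself instead of being re-derived by three pipelines that never agree.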

Does Agentic RAG Really Work?

Agents don’t hallucinate because the model is careless - they hallucinate because the prompt is wrong. This discussion breaks down why the real leverage sits in how prompts are built dynamically, not statically.

RAG’s ceiling: grounding helps, but generic RAG collapses under ambiguity, stale data, and risky actions like SQL generation against production systems.

Agentic RAG as separation of concerns: split work into narrowly scoped agents with their own context, data access, and guardrails, closer to microservices than monoliths.

Dynamic prompts as the control plane: dense embeddings over schemas and docs drive context-aware prompts that guide the LLM, rather than letting it guess joins, metrics, or intent.

The payoff is simple: fewer hallucinations, safer systems, and agents that answer the question you meant to ask.
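Here is a rough sketch of the dynamic-prompt idea for a SQL agent. It makes two loudly stated assumptions: the table descriptions are made up, and a bag-of-words vector stands in for the dense embedding model the discussion mentions, so the example runs without any model or API. `SCHEMA_DOCS`, `embed`, `cosine`, and `build_prompt` are hypothetical names. The part that carries over is the shape: rank schema snippets against the question, then assemble the prompt from the top matches instead of letting the LLM guess tables and joins.

```python
import math
import re
from collections import Counter

# Hypothetical schema snippets this agent is allowed to see.
SCHEMA_DOCS = {
    "orders": "table orders: order_id, customer_id, order_total, created_at. One row per order.",
    "customers": "table customers: customer_id, name, signup_date, country.",
    "web_sessions": "table web_sessions: session_id, customer_id, page_views, started_at.",
}


def embed(text: str) -> Counter:
    """Toy stand-in for a dense embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_prompt(question: str, k: int = 2) -> str:
    """Pick the k schema snippets most similar to the question and build a grounded prompt."""
    q = embed(question)
    ranked = sorted(SCHEMA_DOCS, key=lambda name: cosine(q, embed(SCHEMA_DOCS[name])), reverse=True)
    context = "\n".join(SCHEMA_DOCS[name] for name in ranked[:k])
    return (
        "Answer using only the tables below. If they cannot answer the question, say so.\n"
        f"{context}\n\nQuestion: {question}"
    )


# Selects the orders and customers snippets; web_sessions is left out of the prompt.
print(build_prompt("What is the total order value per customer country?"))
```

Swapping `embed` for a real embedding model changes ranking quality, not structure. Giving each narrowly scoped agent its own `SCHEMA_DOCS` is one simple way to keep its data access separate from the others'.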

Da2a: The Future of Data Platforms is Agentic, Distributed, and Collaborative

Waiting days for a simple metric is a clear sign of a broken data platform. This post argues for flipping the stack: instead of one monolithic “source of truth,” run multiple domain agents (sales, marketing, ecommerce) and let an orchestrator stitch answers together.

Shift the bottleneck: move from centralized pipelines and dashboards to domain-owned agents that answer questions directly against their own data.

How Da2a works: a root orchestrator calls remote specialist agents via “agent cards” over an A2A protocol, treating agents as tools.

What breaks next: payload size (A2A is meant for small JSON/text messages), agent discovery (hardcoded today), and memory (stateless agents can't learn from past interactions).

If this model holds, the next data platform upgrade is organizational - not another warehouse migration.
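Here is a toy, in-process sketch of the Da2a routing pattern, assuming a simplified agent card made of a name, a description, and skill keywords. It is not the A2A protocol or any SDK. `AgentCard`, `Orchestrator`, the keyword routing, the 4 KB payload cap, and the metric values are all hypothetical placeholders. The lambda handlers stand in for what would be remote calls to domain agents.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AgentCard:
    """Hypothetical, simplified agent card: who the agent is and what it can answer."""
    name: str
    description: str
    skills: tuple[str, ...]


# Hypothetical cap, reflecting that A2A-style messages are meant for small JSON/text.
MAX_PAYLOAD_BYTES = 4_096


class Orchestrator:
    """Root agent that treats registered domain agents as tools."""

    def __init__(self) -> None:
        # Agents are registered by hand, mirroring "discovery is hardcoded today".
        self._agents: dict[str, tuple[AgentCard, Callable[[str], dict]]] = {}

    def register(self, card: AgentCard, handler: Callable[[str], dict]) -> None:
        self._agents[card.name] = (card, handler)

    def route(self, question: str) -> AgentCard:
        """Pick the agent whose skill keywords overlap most with the question's words."""
        words = set(question.lower().split())
        return max((card for card, _ in self._agents.values()),
                   key=lambda card: len(words & set(card.skills)))

    def ask(self, question: str) -> dict:
        card = self.route(question)
        _, handler = self._agents[card.name]
        answer = handler(question)  # a remote agent call in a real deployment
        size = len(json.dumps(answer).encode("utf-8"))
        if size > MAX_PAYLOAD_BYTES:
            raise ValueError(f"{card.name} returned {size} bytes, too large for an A2A-style message")
        return {"agent": card.name, "answer": answer}


orchestrator = Orchestrator()
orchestrator.register(
    AgentCard("sales", "Pipeline and revenue questions", ("revenue", "deals", "pipeline")),
    lambda q: {"metric": "q4_revenue", "value": 1_200_000},  # placeholder value
)
orchestrator.register(
    AgentCard("marketing", "Campaign performance", ("campaign", "clicks", "leads")),
    lambda q: {"metric": "leads", "value": 340},  # placeholder value
)

result = orchestrator.ask("how many leads did the holiday campaign generate")
print(result)  # {'agent': 'marketing', 'answer': {'metric': 'leads', 'value': 340}}
```

Keyword routing is the simplest thing that runs here. A real orchestrator would more likely let an LLM choose among agent cards, which is what treating agents as tools implies. The payload check is where the "small JSON/text" limitation would first bite.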

MEME OF THE WEEK

ML CONFESSIONS

A Very Silent Night

’Twas the week before Christmas, and all through the stack,

Not a pipeline was running, every job rolling back.

The cron had gone quiet, the alerts didn’t scream,

Because someone muted Slack during load test fifteen.

A hotfix on Friday, just one harmless refactor,

Changed a column name, broke every downstream factor.

By Monday it surfaced, metrics frozen in time.

Yeah, that’s on me. CI said “looks fine”.

Share your confession here.

Really strong framing on RAG. The insight about hallucinations getting harder to notice resonates: we saw this when our retrieval was pulling slightly outdated docs and nobody caught it for weeks because the model confidently synthesized them. The shift you're describing from prompt-first to context engineering makes sense, especially the unified layer approach. Kinda wish more teams treated context like infra instead of an afterthought.