Code, Calls, and Cash Multipliers

Plus, NotebookLM video workflows, Anthropic’s bug lessons, and Google’s design playbook

It’s not a case of if Alibaba catches up, but Qwen.

HOT TAKE

Trust on Credit

Latency is a tax on trust - the faster you respond, the less patience you buy.

What do you invest in:

LAST WEEK’S TAKE

Smokin’

Last week’s vote shows you think smoke tests are lit.

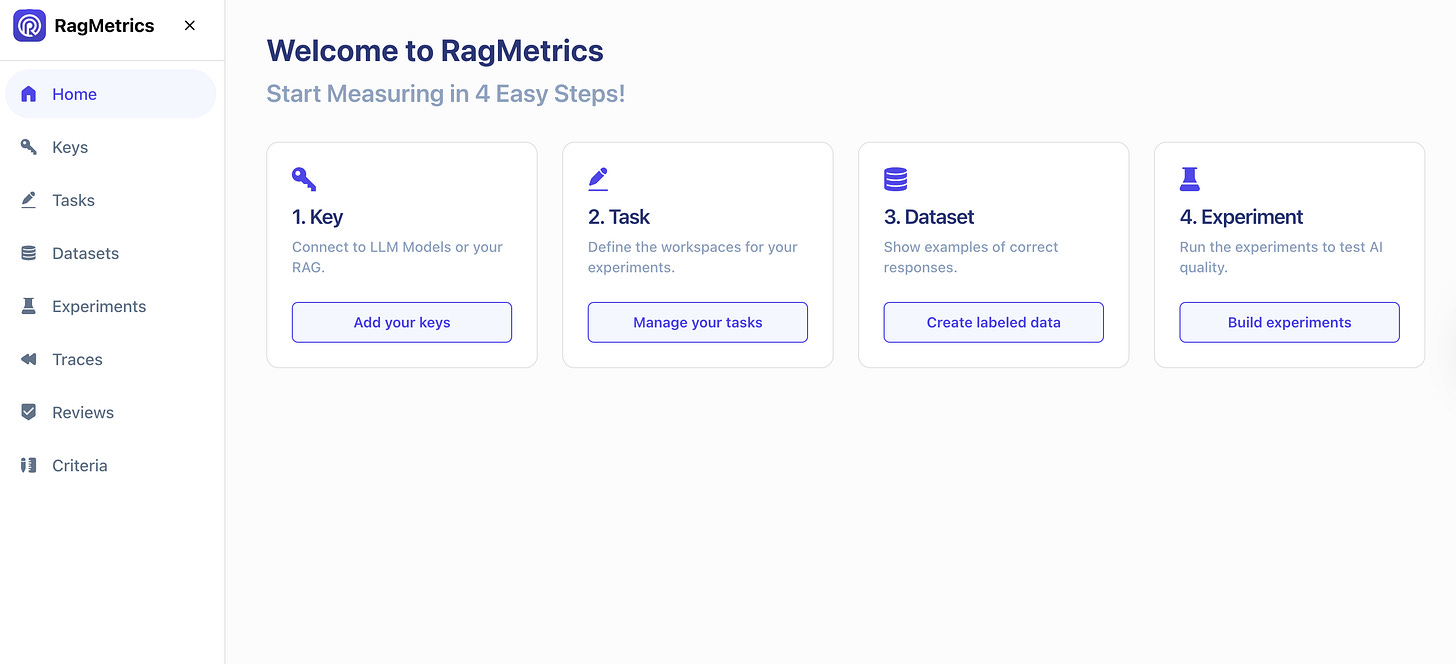

PRESENTED BY RAGMETRICS

Stop Guessing. Start Testing GenAI with RagMetrics

Manual reviews don’t scale—but manual QA remains the norm for many GenAI systems. RagMetrics changes that. We’re the QA and monitoring layer for Generative AI, helping teams replace slow, inconsistent spot-checks with automated evaluation and production observability.

With RagMetrics, teams can:

Catch hallucinations before they impact users

Score performance using a library of 200+ metrics

Monitor for drift and regressions in live systems

Define unlimited custom metrics specific to their domain

Designed for startup and enterprise adoption alike, RagMetrics ensures GenAI outputs are trustworthy by default.

HIDDEN GEMS

Curated finds to help you stay ahead

The Oracle–OpenAI mega-contract, unpacked by the creator of Kubeflow, highlights how data prep and failed runs dominate costs far more than GPU spend in large-scale AI projects.

Kaggle Grandmasters’ playbook outlines seven proven techniques for tabular data, from advanced ensembling to feature engineering, distilled from years of high-level competition.

Anthropic bug post-mortem describes three infrastructure bugs that caused Claude’s response quality to drop, explains the delays in spotting them, and outlines changes to avoid similar issues.

Inside Google’s NotebookLM design shows how a three-panel “Inputs → Chat → Outputs” flow, rapid prototyping, and adaptive layouts shaped the product’s interface and user experience.

Job of the week

Senior Machine Learning Engineer // Veho (Remote)

Veho is a logistics company that uses technology to improve delivery experiences for businesses and customers. This role focuses on building and maintaining ML infrastructure, supporting production models, and enabling scalable systems for forecasting and orchestration.

Responsibilities

Develop scalable infrastructure for ML deployment and monitoring

Build robust pipelines and feature stores for model workflows

Create tools for experimentation, orchestration, and performance tracking

Collaborate with data scientists on production model ownership

Requirements

5+ years experience in ML engineering or MLOps

Strong skills in building scalable ML systems and pipelines

Proficiency with distributed frameworks such as Ray or Flink

Experience deploying and monitoring ML models in production

Find more roles on our new jobs board - and if you want to post a role, get in touch.

MLOPS COMMUNITY

How LiveKit Became An AI Company By Accident

A tenfold swing in valuation can hinge on a single demo. That’s what Russ d’Sa found when a cloud giant told him LiveKit was worth $20M - unless he tied it to GenAI, then it’d be $200M. Here’s how LiveKit went from pandemic video infra to powering OpenAI’s Voice Mode.

Why WebRTC was the hidden backbone of pandemic-era apps - and how LiveKit made it accessible to every developer

The scrappy voice interface demo that OpenAI quietly discovered and turned into production

Why voice AI is harder than text, from latency thresholds to avoiding the uncanny valley

It’s a crash course in how infrastructure pivots can reshape the AI stack overnight.

Vibe YouTubing with NotebookLM

Imagine converting a full coding course into polished video content in just two days. That’s what happened when NotebookLM’s Video Overview was dropped into an existing MLOps curriculum.

NotebookLM ingested Markdown + Python notes and spun out video modules in minutes

The workflow shifted from painstaking scripting and editing to refining AI-generated drafts

Structured inputs (code, docs) make AI video production not just possible, but practical

The result: serious technical education upgraded at a fraction of the usual effort.

IN PERSON EVENTS

MEME OF THE WEEK

ML CONFESSIONS

The 2030 Phone

We had a feature that calculated “session length” from client timestamps to feed into our anomaly detector. Locally, I tested it on a clean sample and everything looked fine.

In prod, the logs came from lots of different customers and devices. Some of those devices sent timestamps in a slightly different format, and some phones had their clocks set wrong. My parser silently accepted the odd formats but parsed them incorrectly, so a handful of sessions ended up with negative durations like -12,345 seconds. The downstream model saw those huge negative numbers and decided those sessions were ultra-suspicious. Overnight we got alerts for an account that, in reality, was just a phone from a user whose clock was set to 2030.

The support team called it “time travel fraud” for a while. I had to explain in the postmortem that we hadn’t discovered a sci-fi attack vector - just messy timestamps.
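For anyone wanting to avoid their own time travel incident, here is a minimal sketch of one possible guard, not the confessor's actual fix. It assumes clients send ISO 8601 timestamps with an explicit UTC offset and that a trusted server clock is available. The names `parse_client_timestamp` and `session_length_seconds`, along with the `MAX_CLOCK_SKEW` and `MAX_SESSION` thresholds, are hypothetical and chosen only for illustration.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Assumed tolerances for illustration; tune them to your own traffic.
MAX_CLOCK_SKEW = timedelta(minutes=5)
MAX_SESSION = timedelta(hours=24)


def parse_client_timestamp(raw: str) -> datetime:
    """Parse one strict format and fail loudly on anything else."""
    ts = datetime.fromisoformat(raw)  # raises ValueError on unknown formats
    if ts.tzinfo is None:
        # A timestamp without an offset is ambiguous, so reject it.
        raise ValueError(f"timestamp has no UTC offset: {raw!r}")
    return ts.astimezone(timezone.utc)


def session_length_seconds(
    start_raw: str, end_raw: str, server_now: datetime
) -> Optional[float]:
    """Return the session length in seconds, or None if the inputs look wrong.

    server_now must be timezone-aware.
    """
    try:
        start = parse_client_timestamp(start_raw)
        end = parse_client_timestamp(end_raw)
    except ValueError:
        return None  # unparseable: treat the feature as missing, not as a number

    # Reject timestamps from the future (for example, a phone set to 2030).
    latest_allowed = server_now + MAX_CLOCK_SKEW
    if start > latest_allowed or end > latest_allowed:
        return None

    duration = end - start
    if duration < timedelta(0) or duration > MAX_SESSION:
        return None  # negative or implausibly long sessions are dropped
    return duration.total_seconds()
```

Returning `None` keeps a bad clock from turning into an extreme feature value. The downstream model then needs an explicit policy for missing values, and counting how often `None` comes back is a cheap way to spot a new client format early.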

Share your confession here.