Claude vs. Pikachu: What It Taught Us About LLMs

Should’ve seen the reaction from the gym bros when I told them what I'm benching.

PODCAST

Claude Plays Pokémon - A Conversation with the Creator

All work and no play apparently applies to models too.

David built Claude Plays Pokémon as a way to test long-horizon coherence and agent behaviour. It uses screenshots, metadata, and a simple toolset to move through the game – and became a surprisingly useful benchmark as models improved.

We also got into when fine-tuning is actually useful, and why prompting or RAG usually gets you further, faster:

Prompting covers most needs if pushed properly

RAG adds knowledge without complexity

Fine-tuning is costly and rarely worth it unless precision or scale demand it

Click below to listen and keep your model from having a Jack Nicholson moment straight out of The Shining.

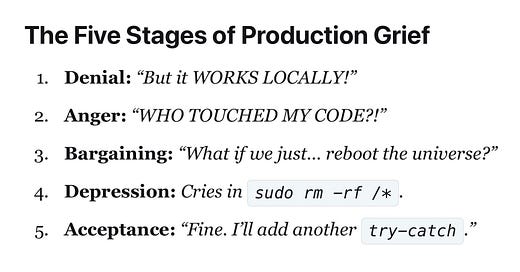

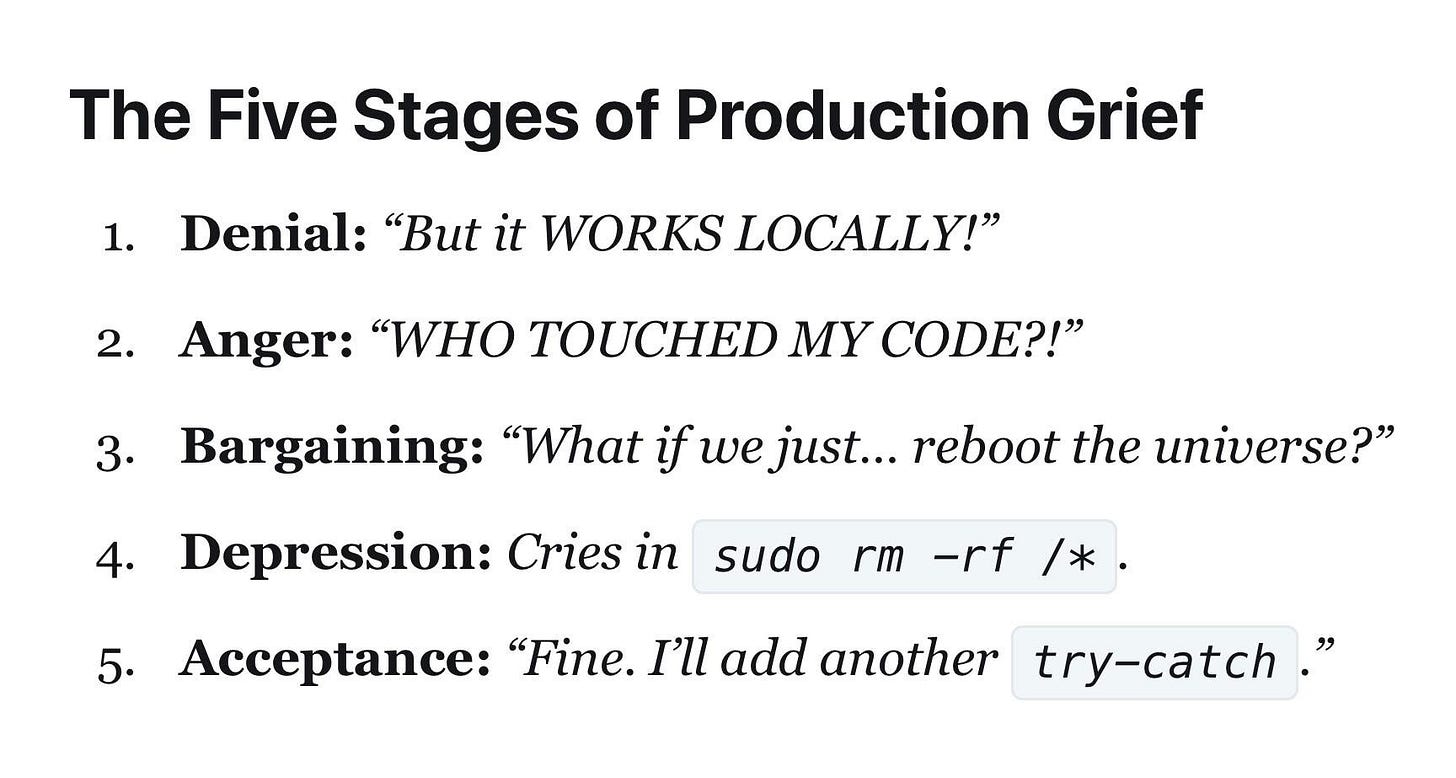

MEME OF THE WEEK

PODCAST

Building Trust Through Technology: Responsible AI in Practice

I appreciate 'responsible' might not be the first word you associate with me, but don't let that put you off this episode.

Allegra talked about how engineers are often expected to carry the weight of building 'responsible AI' systems, despite a lack of clear definitions or leadership support. Having clarity on intent, involving diverse perspectives early, and codifying guiding principles into technical requirements can turn that vague mandate into something concrete.

We also spent time on “perspective density” – the importance of having more varied voices in the room:

Shapes better questions

Highlights unseen risks

Leads to more robust systems

Grab a coffee, hit play, and drink responsibly.

ML CONFESSIONS

Our chatbot’s sentiment analysis was a mess—everything came back neutral, whether the user typed “I love this!” or “I just stepped on a Lego.” After too many late nights debugging the pipeline, I cracked and added a rule: if there’s an emoji, bump the sentiment to positive.

It worked.

Suddenly, every reply had that chipper, vaguely too enthusiastic tone the client adored.

“Emoji = joy” quietly became core logic in our production system.

Months later, we got recognition for “advancing emotional intelligence in conversational AI.” I kept a straight face through the whole ceremony.

Find the inner peace you deserve and confess here.

BLOG

More Automation + More Reproducibility = MLOps Python Package v4.1.0

Like that half-court buzzer beater you somehow sank in high school, some things just aren’t reproducible.

Your build doesn’t have to be one of them. Version 4.1.0 of the MLOps Python Package improves reproducibility and automation by switching from PyInvoke to Just, which offers cleaner task definitions for linting, testing, packaging, and more. Builds are now fully deterministic thanks to a constraints.txt compiled with uv, which locks dependency versions to avoid CI inconsistencies.

The new packaging flow uses Just to:

Generate constraints.txt with exact hashes

Build wheels with --require-hashes enforcement

Ensure repeatable, secure installs across environments
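To make the hash-locking step more concrete, here is a minimal Python sketch of the rules pip's hash-checking mode (--require-hashes) imposes on a requirements-style file: every requirement must be pinned with == and carry at least one --hash. The helper names (parse_entries, check_hash_pinned) and the placeholder hashes are hypothetical; this is a rough approximation for illustration, not code from pip or the MLOps Python Package, and it ignores URLs, editable installs, and most pip options.

```python
import re

# Hypothetical helper: roughly mirrors the checks pip's hash-checking mode
# (--require-hashes) applies. Not taken from pip or the MLOps Python Package.

# Accepts "name==version" or "name[extra]==version" (and "===").
PINNED = re.compile(r"[A-Za-z0-9][A-Za-z0-9._-]*(\[[^\]]*\])?===?[^=<>!~,;\s]+")


def parse_entries(text: str) -> list[str]:
    """Join backslash-continued lines and drop comments and blank lines."""
    joined = text.replace("\\\n", " ")
    entries = []
    for line in joined.splitlines():
        # A '#' at line start or after whitespace begins a comment.
        line = re.sub(r"(^|\s)#.*$", "", line).strip()
        if line:
            entries.append(line)
    return entries


def check_hash_pinned(text: str) -> list[str]:
    """Return a list of problems; an empty list means every entry passes."""
    problems = []
    for entry in parse_entries(text):
        requirement, *options = entry.split()
        if requirement.startswith("-"):
            continue  # global options such as --index-url are not requirements
        if not PINNED.fullmatch(requirement):
            problems.append(f"{requirement}: not pinned with ==")
        if not any(opt.startswith("--hash=") for opt in options):
            problems.append(f"{requirement}: no --hash given")
    return problems


# Placeholder hashes; a real file compiled by uv would contain full sha256 digests.
SAMPLE = """\
certifi==2024.8.30 \\
    --hash=sha256:aaaa \\
    --hash=sha256:bbbb
    # via requests
requests==2.32.3 \\
    --hash=sha256:cccc
rich>=13.0
"""

for problem in check_hash_pinned(SAMPLE):
    print(problem)
# rich>=13.0: not pinned with ==
# rich>=13.0: no --hash given
```

The loose rich>=13.0 line is exactly the kind of entry that lets two CI runs resolve different versions; a hash-locked file rules it out before install time.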

Other updates include Gemini Code Assist for PR review and automatic GitHub ruleset enforcement.

Give it a read to make sure your build's a slam dunk every time.

HIDDEN GEMS

A hacker’s methodical approach to probing LLM applications for vulnerabilities, with a focus on red-teaming techniques, injection points, and how current defenses stack up against creative misuse.

A GitHub repository presenting GPUStack, an open-source GPU cluster manager designed for deploying and managing AI models across diverse hardware environments, including various GPU brands on macOS, Windows, and Linux systems.

A research paper introducing R1-Omni, an omni-multimodal LLM enhanced with Reinforcement Learning with Verifiable Reward (RLVR), aimed at improving emotion recognition by integrating visual and audio modalities.

End Of The Line // Gem // Song

An API reference from Atla AI outlining endpoints for evaluating LLM interactions and integrating structured model feedback into workflows.

BLOG

Is Your Chatbot Secure?

After the dealership chatbot that agreed to sell a Chevrolet for $1, you know an insecure chatbot when you see one – but how do you make sure your own chatbot’s not next?

This post explains how to build secure RAG systems by combining Realm’s permission-aware data connectors with ApertureDB’s graph-vector database. It tackles key enterprise challenges like syncing fast-changing permissions and enforcing fine-grained access control at query time.

Rather than relying on flat ACLs, ApertureDB models access paths as graph relationships:

Users, groups, and files are nodes, with edges showing permissions and membership

Access checks traverse the graph to validate whether a user can retrieve a chunk

Updates are efficient, needing only local edge changes when permissions shift
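The graph-based access check described above can be sketched in a few lines of Python. This is a minimal in-memory model under stated assumptions: the AccessGraph class, the edge labels (member_of, can_read, contains), and the node names are all hypothetical, and none of it reflects ApertureDB's actual API or query language. It shows a breadth-first traversal from a user to a chunk, and a revocation that touches only one edge.

```python
from collections import deque

# Hypothetical in-memory model of permission-aware retrieval.
# It is NOT ApertureDB's API or query language.


class AccessGraph:
    def __init__(self) -> None:
        # src node -> {dst node: edge label}; labels are kept for readability,
        # and this sketch follows every edge regardless of label.
        self.edges: dict[str, dict[str, str]] = {}

    def add_edge(self, src: str, dst: str, label: str) -> None:
        self.edges.setdefault(src, {})[dst] = label

    def remove_edge(self, src: str, dst: str) -> None:
        # Revoking access touches only this one edge.
        self.edges.get(src, {}).pop(dst, None)

    def can_access(self, user: str, chunk: str) -> bool:
        """Breadth-first search along directed edges from user to chunk."""
        seen = {user}
        queue = deque([user])
        while queue:
            node = queue.popleft()
            if node == chunk:
                return True
            for nxt in self.edges.get(node, {}):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return False


g = AccessGraph()
g.add_edge("alice", "eng", "member_of")          # user -> group
g.add_edge("eng", "design-doc.pdf", "can_read")  # group -> file
g.add_edge("design-doc.pdf", "chunk-17", "contains")  # file -> chunk
g.add_edge("bob", "sales", "member_of")

print(g.can_access("alice", "chunk-17"))  # True
print(g.can_access("bob", "chunk-17"))    # False
g.remove_edge("alice", "eng")
print(g.can_access("alice", "chunk-17"))  # False
```

A production system would likely restrict which edge labels may be traversed and in what order (membership, then a permission, then containment), but even this toy version shows why a permission change is a local edge update rather than a rewrite of every affected ACL.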

Have a read to make sure your chatbot isn't the next newsletter punchline.